Navigating AI's Impact on Social Interactions, Creative License and Policy

FM (Friday Morning) Reflection #27

In this series

The lines between human and AI-augmented content become more difficult to discern as generative AI is integrated into the work of content creation.

From AI-generated hosts to commercial production automation, the use of digital avatars to represent real people, and even the potential to create posthumous celebrity appearances, AI's influence is rapidly growing. It's clear to me that we need a new framework to guide the creation of content in today's AI-powered mediascape, along with a how-to guide for consumers.

This is not a one-and-done kind of thing. To push the conversation forward, this is the third in a series of FM (Friday Morning) Reflections in which I explore the issues and tensions that come with the integration of AI in our media ecosystem, with the end goal of developing a framework for AI literacy.

This week, I examine and unpack the issues in three new areas:

Human vs. Bot Interaction

Creative License vs. Copyright and Ownership

Corporate vs. Public Policy

Human vs. Bot Interaction

Social media was originally seen as a tool for fostering genuine human connection through digital technology: a virtual space for sharing experiences and building relationships.

However, the rise of AI-generated content is rapidly transforming this landscape, blurring the lines between human and machine interaction. As bots and automated accounts flood social platforms with posts and comments, it becomes increasingly difficult to discern authentic human voices from synthetic ones.

The value of social platforms diminishes when we can no longer be sure if we're engaging with a real person or an AI-powered persona.

The constant bombardment of AI-generated content can also lead to information overload and a decline in the quality of online discourse. Moreover, the inability to distinguish between human and bot interactions creates opportunities for manipulation and misinformation, as malicious actors can exploit AI-generated content to spread propaganda, sow discord, or influence public opinion. This undermines trust in social platforms and erodes the foundation of genuine human connection they were built upon.

There are legitimate uses for AI-powered automation, such as a publisher refactoring the headlines and summaries in a news feed, or creating promotional notes to inform consumers about new content.

But at this point, my view is that the presence of bots that manipulate narratives or spread disinformation on social networks has destroyed much of their original value proposition.

Creative License vs. Copyright and Ownership

The democratization of creative tools through AI is revolutionizing the way we produce content, but who owns the rights to AI-generated content? The answer is not always clear-cut, and the legal landscape is still evolving to address these challenges.

The U.S. Copyright Office has acknowledged the role of AI in the creative process while maintaining that human creativity remains central to copyright protection. On January 29, 2025, the Office released part 2 of 3 of its report on copyright and artificial intelligence, which "confirms that the use of AI to assist in the process of creation or the inclusion of AI-generated material in a larger human-generated work does not bar copyrightability."

The key word is "assist." The report goes on to clarify that content generated purely by AI and without human control is not eligible for copyright protection.

This guidance marks substantial progress, and we can now turn our attention to developing guidelines for creators, editors, and publishers.

Corporate vs. Public Policy

AI presents a challenge for both corporations and policymakers. Companies are eager to leverage AI's potential to innovate and improve their products and services, while many in society expect safeguards to ensure AI is used ethically and responsibly. This tension between corporate policy and public policy raises questions about where to draw the line between innovation and regulation.

On one hand, companies have a responsibility to self-regulate their use of AI, developing ethical guidelines and best practices to ensure their AI systems are fair, unbiased, and transparent. This includes being mindful of potential risks, such as privacy violations, discrimination, and the appropriate use of customer data.

On the other hand, there's a growing need for public policy to provide a framework for responsible AI development and deployment. This could include regulations on data privacy, algorithmic transparency, and the use of AI in sensitive areas like healthcare and education. Many U.S. states and municipalities have already started to enact regulations on this front in the absence of clear guidance at the national level.

The challenge lies in finding the right balance between fostering innovation and protecting the public interest. Overly restrictive regulations could stifle AI development and hinder its potential benefits, while a lack of oversight could lead to harmful consequences.

But even this framing is too simplistic and ignores the biggest driver of product and policy in the marketplace — what we choose as consumers and buyers of technology. This is by far the most influential force on feature sets, product lines, capital investment, and ultimately the behavior of both commercial and regulatory interests.

A collaborative approach is needed, with companies, policymakers, and the public working together to shape the future of AI. This includes open dialogue, transparency about AI development and deployment, and a willingness to adapt regulations as AI technology continues to evolve. And as consumers and buyers, we must demand products that provide for the safe use of AI while we innovate.

By striking a balance between corporate policy and public policy, we can harness the transformative power of AI while ensuring it is used in a way that benefits society as a whole.

Finding Our Way Forward

Each of these tensions (human vs. bot interaction, creative license vs. copyright, and corporate vs. public policy) presents a complex web of opportunities and challenges, forcing us to rethink our understanding of creativity, authenticity, and human interaction.

As we navigate this evolving landscape, it's crucial to embrace AI literacy. For my part, these Reflections are intended to develop the knowledge and skills we need to critically evaluate AI-generated content and make informed decisions about its use. This includes understanding the capabilities and limitations of AI, recognizing the ethical implications of its use, and developing strategies for preserving human connection and authenticity in a digital world increasingly influenced by AI.

By fostering open dialogue, promoting transparency, and establishing ethical guidelines, we can harness the transformative power of AI while mitigating its potential risks.

The journey towards AI literacy is an ongoing one, requiring continuous learning and adaptation as AI technology continues to evolve. By embracing this challenge, we can ensure that AI serves as a tool for progress, empowering us to create a more informed, equitable, and connected world.

Have a great weekend!

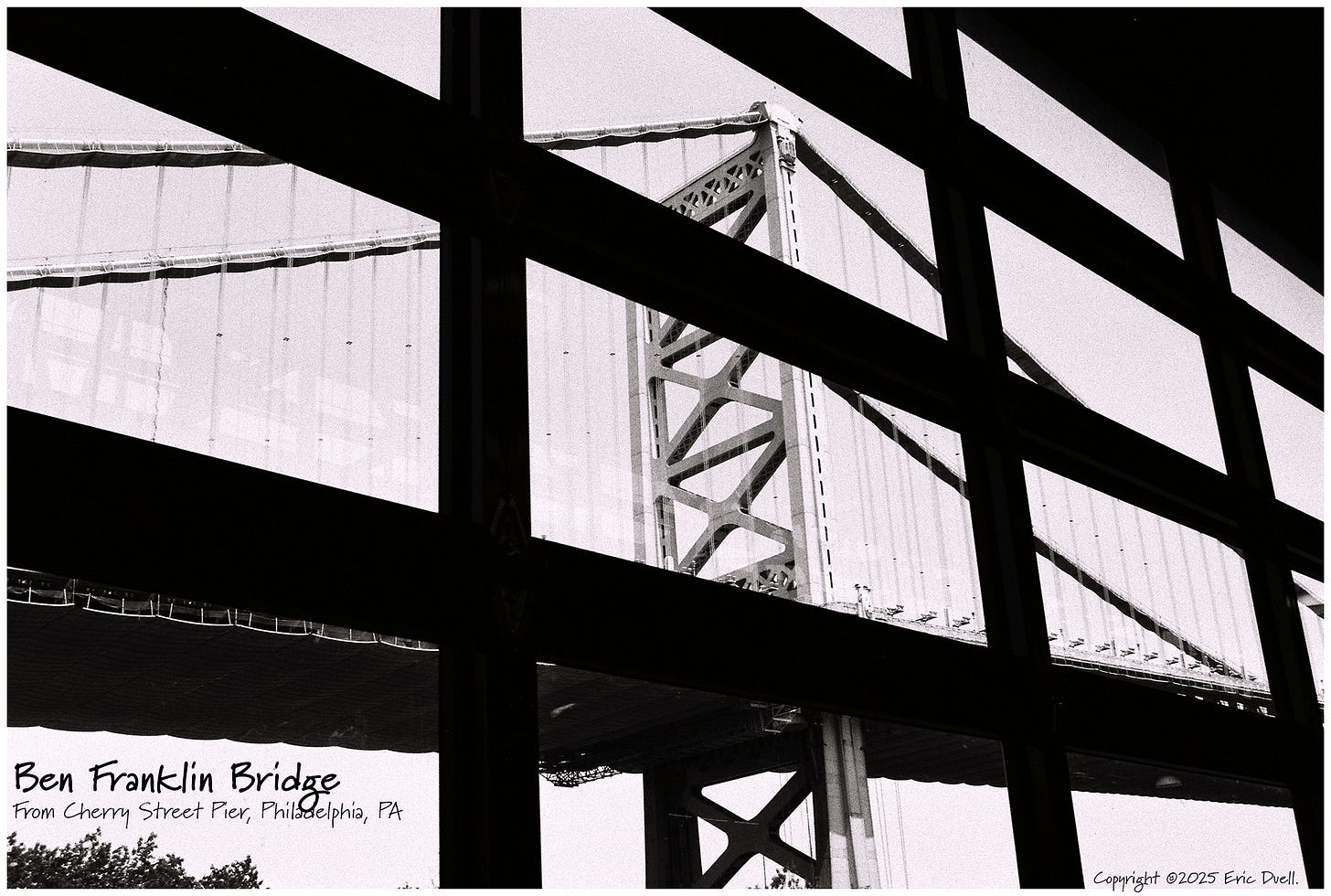

Photo Bonus

The Ben Franklin Bridge, framed up by the windows at Cherry Street Pier, Philadelphia, PA.