Generative AI tools are fast becoming indispensable in the workplace, and I believe AI literacy is now a required skill for both creators and consumers. But we don't yet have commonly accepted frameworks to guide us in this evolving space.

In last week's FM (Friday Morning) Reflection, Navigating AI’s Tensions to Create a Roadmap for AI Literacy, I began exploring several key issues in generative AI, as a way to expose and build a new set of guidelines to help us.

The first three tensions I discussed last week were:

Creator Responsibility vs. Consumer Literacy

Who holds the responsibility for discerning the authenticity of content—the creator or the consumer? Should creators explicitly disclose AI-generated content, or is it up to consumers to evaluate its authenticity? Here’s a proposed starting point:

Creators have a responsibility to establish clear disclosure rules and ethical guidelines for their publications

Consumers will need new skills to recognize AI-generated content

The reality is that AI-generated content can also be weaponized for deception, so it’s a “both and” situation.

Personal Voice vs. Synthetic Persona

This tension strikes at the core of identity and authenticity.

Do readers or listeners want to hear the “real me” or is an AI-generated clone acceptable?

At what point does AI-driven content creation start to replace human expression?

This debate extends beyond voice cloning to written pieces, social media posts, and even fully automated personas. If a brand or personality automates its content with AI—without human oversight—is it still them?

Disclosure vs. Comprehension

Simply labeling content as “AI-generated” is not enough if audiences don’t truly understand what that entails.

If people don’t grasp how convincingly AI can replicate voices or writing styles, a disclosure tag might not carry much weight.

The rise of AI-generated news, images, and interactions could lead to widespread erosion of trust in media.

If audiences can’t tell what’s real and what’s synthetic, even authentic content will be scrutinized and deemed to be suspect or false.

Continuing to Explore — Three More Tensions

Today, I’m taking this exploration a step further by examining how AI influences the entire chain of creation and distribution—from the original voice or persona to the final audience.

Individual Agency vs. AI Inertia

Core Question: When does AI assistance in creativity shift from enabling to overtaking an author’s agency?

AI can enhance expression, overcome creative blocks, and expand possibilities. It helps writers refine their ideas, generate alternative text, and craft more compelling narratives. Here’s the crux of the issue — there’s a point at which AI might start driving the content rather than merely assisting.

As I shared in my podcast On Reflection... Finding Authenticity in a Changing World, authenticity lies in whether the content reflects the creator’s genuine thoughts and intent. The risk with AI? Over-reliance on it could lead to creators losing their unique voice and diminishing their skills, leaving them as editors of AI chat outputs rather than original thinkers. The content might still be “authentic,” but it might not be novel or compelling.

Individual Performance vs. Commercial Interests

Core Question: How can we balance the commercial potential of AI voice replication with protecting individuals’ unique identities and performances?

The ability to replicate voices, images, and personas opens up exciting possibilities, including personalized experiences, multilingual translation, and creative innovations. At the same time:

Individuals should have the right to decide how and when their voice is replicated.

AI-powered replicas should be used transparently, particularly in contexts where audiences would otherwise assume they are engaging with a real person.

Ethical considerations must also extend to deceased individuals, ensuring their legacy isn’t misrepresented or exploited.

Perhaps some AI-enabled content producers should adopt a model akin to political endorsements: “I’m Eric Duell, and I approve this post.”

Utility vs. Credibility in AI-Generated Media

Core Question: How do we maximize AI’s efficiency in media creation without undermining trust?

AI offers compelling efficiencies: automating repetitive tasks, summarizing content, and enhancing accessibility. But with that efficiency come several new challenges:

Who is responsible if AI-generated clips distort meaning?

Should AI-generated media be held to the same editorial standards as human journalism?

If errors or biases arise, who is accountable—the AI’s developer, the platform, or the user?

These are critical issues that, if left unresolved, will be tested in the courts rather than proactively addressed through thoughtful governance. The last point about liability is a real challenge: it brings up very complex issues of law, free speech, and other rights that frequently pit one interest against another. The simple answer may be that we have to look at each case individually and in context.

Connecting These Tensions to AI Literacy

Just as we learned web literacy over the past several decades—knowing not to click sketchy links or believe every email—we must also develop AI literacy.

And by examining these new tensions, it’s clear that an AI Literacy Framework is urgently needed. In upcoming posts, I’ll continue mapping these challenges and proposing potential solutions.

As always, I invite your thoughts and collaboration on this evolving discussion. Have a great weekend!

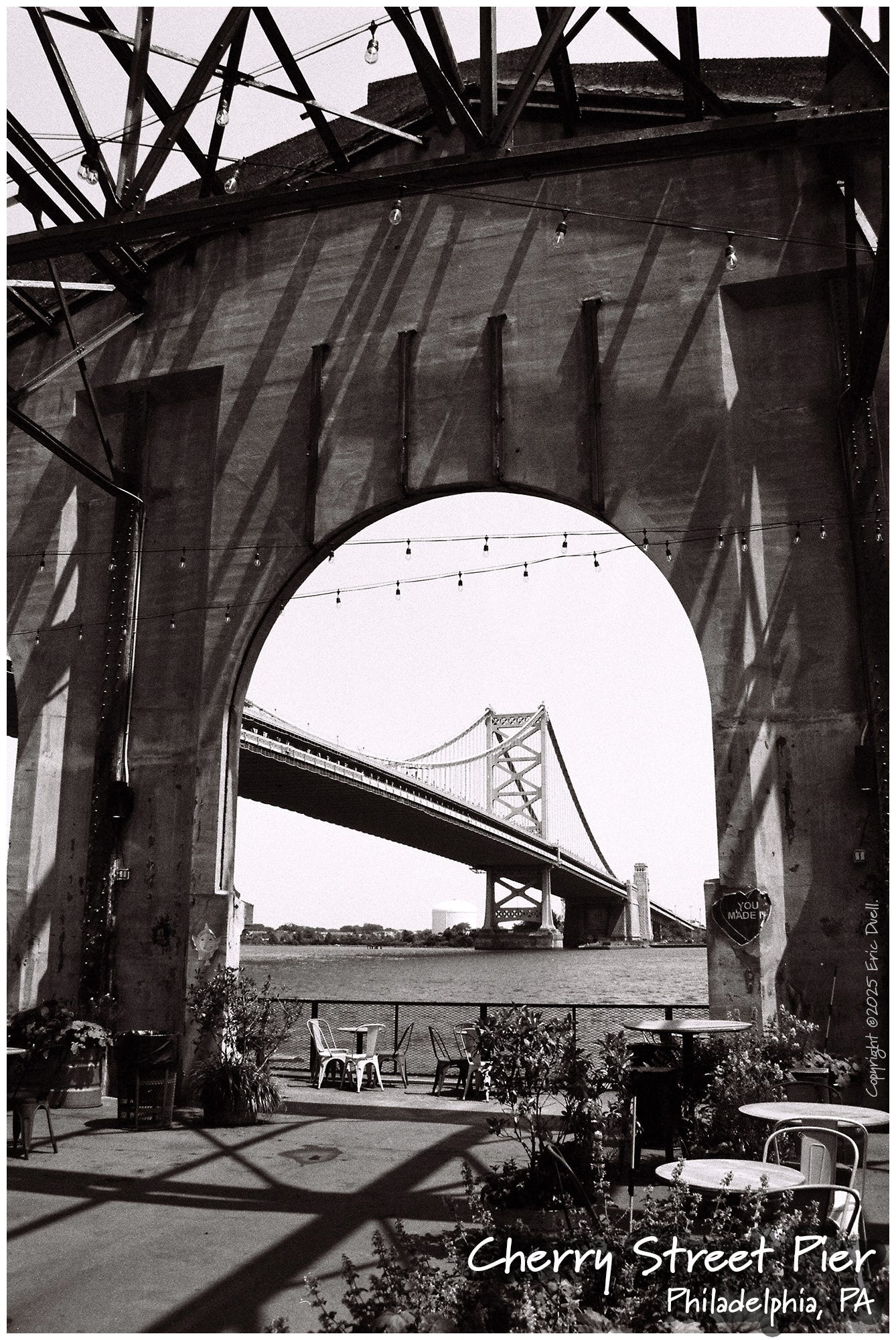

Photo Bonus

As I mentioned in last week’s Photo Bonus, the architectural framework of a building provides both strength and resilience. In this case, it also creates an enticing space for people to live – like at this lovely social gathering place at Cherry Street Pier on the Delaware River waterfront in Philadelphia. I hope that we’ll find the same within the future framework for AI Literacy that is developing here in this series of posts.